Oracle Solaris Administration: Network Interfaces and Network Virtualization (Oracle Solaris 11 Information Library)

17. Introducing Network Virtualization and Resource Control (Overview)

Resource control is the process of allocating a system's resources in a controlled fashion. Oracle Solaris resource control features enable bandwidth to be shared among the VNICs on a system's virtual network. You can also use resource control features to allocate and manage bandwidth on a physical interface without VNICs and virtual machines. This section introduces the major features of resource control and briefly explains how these features work.

Searchnetworking.com defines bandwidth as “the amount of data that can be carried from one point to another in a given time period (usually a second).” Bandwidth management enables you to assign a portion of the available bandwidth of a physical NIC to a consumer, such as an application or customer. You can control bandwidth on a per-application, per-port, per-protocol, and per-address basis. Bandwidth management ensures efficient use of the large amount of bandwidth available from the new GLDv3 network interfaces.

Resource control features enable you to implement a series of controls on an interface's available bandwidth. For example, you can set a guarantee of an interface's bandwidth to a particular consumer. That guarantee is the minimum amount of assured bandwidth allocated to the application or enterprise. The allocated portion of bandwidth is known as a share. By setting up guarantees, you can allocate enough bandwidth for applications that cannot function properly without a certain amount of bandwidth. For example, streaming media and Voice over IP consume a great deal of bandwidth. You can use the resource control features to guarantee that these two applications have enough bandwidth to run successfully.

You can also set a limit on the share. The limit is the maximum allocation of bandwidth that the share can consume. By using limits, you can prevent non-critical services from taking bandwidth away from critical services.

Finally, you can prioritize among the various shares allotted to consumers. You can give highest priority to critical traffic, such as heartbeat packets for a cluster, and lower priority for less critical applications.
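The interaction of guarantees, limits, and priorities can be illustrated with a small model. The following Python sketch is not how Oracle Solaris implements resource control. It is a simplified illustration under stated assumptions: the `Share` class, the `allocate` function, the two-pass allocation order, and all bandwidth figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Share:
    """Hypothetical model of a bandwidth share (not a Solaris API)."""
    name: str
    guarantee: float  # assured minimum, in Mbps
    limit: float      # maximum the share may consume, in Mbps
    priority: int     # assumption: lower number means higher priority


def allocate(capacity, shares, demand):
    """Split link capacity (Mbps) among shares for the given demand.

    Pass 1 gives every share its demand, up to its guarantee.
    Pass 2 hands out spare capacity in priority order, never
    exceeding a share's limit or its actual demand.
    """
    alloc = {s.name: min(demand.get(s.name, 0.0), s.guarantee) for s in shares}
    spare = capacity - sum(alloc.values())
    if spare < 0:
        raise ValueError("guarantees exceed link capacity")

    for s in sorted(shares, key=lambda s: s.priority):
        want = min(demand.get(s.name, 0.0), s.limit) - alloc[s.name]
        extra = max(0.0, min(want, spare))
        alloc[s.name] += extra
        spare -= extra
    return alloc


shares = [
    Share("voip", guarantee=100, limit=200, priority=0),
    Share("video", guarantee=300, limit=600, priority=1),
    Share("bulk", guarantee=0, limit=400, priority=2),
]
demand = {"voip": 150, "video": 700, "bulk": 900}
result = allocate(1000, shares, demand)
print(result)
```

In this example, the low-priority `bulk` share receives only what remains after the higher-priority shares reach their demand or limit, while `video` is capped at its limit even though it asks for more.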

For example, application service providers (ASPs) can offer customers fee-based levels of service that are based on the bandwidth share that the customer purchases. As part of the service level agreement (SLA), each share is then guaranteed an amount of bandwidth, not to exceed the purchased limit. (For more information on service level agreements, see Implementing Service-Level Agreements in Oracle Solaris Administration: IP Services.) Priority controls might be based on different tiers of the SLA, or different prices paid by the SLA customer.

Bandwidth usage is controlled through management of flows. A flow is a stream of packets that all have certain characteristics, such as the port number or destination address. These flows are managed by transport, service, or virtual machine, including zones. Flows cannot exceed the amount of bandwidth that is guaranteed to the application or to the customer's purchased share.
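To make the idea of a flow concrete, the following Python sketch groups packets by shared attributes. It is only an illustration of the concept, not the Oracle Solaris classifier. The `Packet` and `FlowRule` types, the `classify` function, the first-match rule order, and the addresses are all assumptions introduced for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Packet:
    """Hypothetical, minimal view of a packet header."""
    transport: str  # for example "tcp" or "udp"
    dst_addr: str
    dst_port: int


@dataclass(frozen=True)
class FlowRule:
    """A flow is defined by the attributes that its packets share.

    An attribute left as None matches any value.
    """
    name: str
    transport: Optional[str] = None
    dst_addr: Optional[str] = None
    dst_port: Optional[int] = None

    def matches(self, pkt: Packet) -> bool:
        pairs = (
            (self.transport, pkt.transport),
            (self.dst_addr, pkt.dst_addr),
            (self.dst_port, pkt.dst_port),
        )
        return all(want is None or want == got for want, got in pairs)


def classify(pkt, rules):
    """Return the name of the first matching flow, or None."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.name
    return None


rules = [
    FlowRule("https", transport="tcp", dst_port=443),
    FlowRule("http", transport="tcp", dst_port=80),
    FlowRule("db", dst_addr="192.0.2.10"),
]
print(classify(Packet("tcp", "192.0.2.5", 443), rules))
```

Once packets are grouped into named flows in this way, a guarantee, limit, or priority can be attached to each flow name rather than to individual packets.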

When a VNIC or flow is assigned a guarantee, that VNIC or flow is assured its designated bandwidth even if other flows or VNICs also use the interface. However, assigned guarantees are workable only if, taken together, they do not exceed the maximum bandwidth of the physical interface.
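The constraint on guarantees amounts to simple arithmetic, which the following Python sketch spells out. It assumes that the guarantees on one interface are additive. The `guarantees_fit` helper, the consumer names, and the figures are hypothetical and are not part of any Oracle Solaris tool.

```python
def guarantees_fit(link_capacity_mbps, guarantees_mbps):
    """Check whether the combined guarantees fit on the physical link.

    Returns a (fits, headroom) tuple. The headroom is the capacity in
    Mbps that remains unreserved, and is negative when the guarantees
    oversubscribe the link.
    """
    total = sum(guarantees_mbps.values())
    headroom = link_capacity_mbps - total
    return headroom >= 0, headroom


# Three consumers share a 1000 Mbps interface (illustrative values).
ok, headroom = guarantees_fit(1000, {"vnic1": 400, "vnic2": 300, "httpflow": 200})
print(ok, headroom)

# Raising one guarantee oversubscribes the same interface.
bad, shortfall = guarantees_fit(1000, {"vnic1": 600, "vnic2": 300, "httpflow": 200})
print(bad, shortfall)
```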

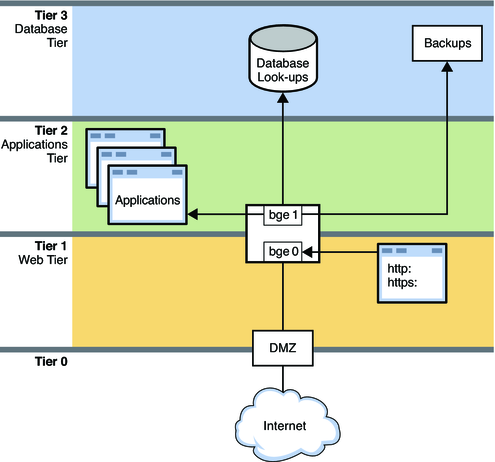

The following figure shows a corporate network topology that uses resource control to manage various applications.

Figure 17-2 Network With Resource Controls in Place

This figure shows a typical network topology that uses resource controls to improve network efficiency and performance. The network does not implement VNICs and containers, such as exclusive zones and virtual machines. However, VNICs and containers could be used on this network for consolidation and other purposes.

The network is divided into four tiers:

Tier 0 is the demilitarized zone (DMZ). This is a small local network that controls access to and from the outside world. Resource control is not used on the systems of the DMZ.

Tier 1 is the web tier and includes two systems. The first system is a proxy server that does filtering. This server has two interfaces, bge0 and bge1. The bge0 link connects the proxy server to the DMZ on Tier 0. The bge0 link also connects the proxy server to the second system, the web server. The http and https services share the bandwidth of the web server with other standard applications. Due to the size and critical nature of web servers, shares of http and https require guarantees and prioritization.

Tier 2 is the applications tier and also includes two systems. The second interface of the proxy server, bge1, provides the connection between the web tier and the applications tier. Through a switch, an applications server connects to bge1 on the proxy server. The applications server requires resource control to manage the shares of bandwidth given to the various applications that are run. Critical applications that need a lot of bandwidth must be given higher guarantees and priorities than smaller or less critical applications.

Tier 3 is the database tier. The two systems on this tier connect through a switch to the proxy server's bge1 interface. The first system, a database server, needs to issue guarantees and to prioritize the various processes involved in database lookups. The second system is a backup server for the network. This system must consume a great deal of bandwidth during backups. However, backup activities are typically carried out overnight. Using resource controls, you can control when the backup processes have the highest bandwidth guarantees and highest priorities.
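The time-dependent treatment of the backup server in Tier 3 can be pictured as a policy that switches between two sets of values. The following Python sketch shows one way to express such a policy. The backup window of 22:00 to 06:00, the `backup_profile` function, and the guarantee values are assumptions chosen for illustration and do not come from the figure.

```python
from datetime import time

# Assumed overnight backup window; adjust to the site's schedule.
NIGHT_START = time(22, 0)
NIGHT_END = time(6, 0)


def backup_profile(now):
    """Pick resource control values for the backup traffic.

    Inside the overnight window, backups get a high guarantee and
    high priority. Outside the window, they are held to a small
    guarantee and low priority so that daytime services keep the link.
    """
    # The window wraps past midnight, so the test is an "or".
    in_window = now >= NIGHT_START or now < NIGHT_END
    if in_window:
        return {"guarantee_mbps": 600, "priority": "high"}
    return {"guarantee_mbps": 50, "priority": "low"}


print(backup_profile(time(23, 30)))
print(backup_profile(time(12, 0)))
```

A scheduler could call such a function at the window boundaries and apply the returned values with whatever resource control commands the system provides.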

Any system administrator who wants to improve a system's efficiency and performance should consider implementing the resource control features. Consolidators can delegate bandwidth shares in combination with VNICs to help balance the load of large servers. Server administrators can use share allocation features to implement SLAs, such as those offered by ASPs. Traditional system administrators can use the bandwidth management features to isolate and prioritize certain applications. Finally, share allocation makes it easy for you to observe bandwidth usage by individual consumers.